Practical Guide to Generative AI Risks and Mitigations for Enterprise

- Nox90

- May 7, 2023


Executive Summary

Today, as organizations adopt and integrate advanced AI technologies such as OpenAI's ChatGPT, companies worldwide are taking steps to control how their employees use these tools at work. As with any new technology, Generative AI models like ChatGPT bring both benefits and risks.

Though it is a powerful tool, it is important to assess how it can fit into organizational workflows in a safe and effective manner. The use of AI tools like ChatGPT in organizations carries several potential risks, including data exposure and privacy regulatory violations, intellectual property theft, confidentiality breaches, inaccurate results, and bias.

In order to minimize the exposure of the organization to security risks, guidelines and safeguards regarding the use of AI models such as ChatGPT should be considered.

This document first presents the main ways employees use GenAI tools like ChatGPT, then some of the main risks those uses might bring, and then suggests a number of mitigations and controls for the organization's security teams to adopt, taking into account the effect that certain safeguards may have on work efficiency.

The last chapter offers best practices that security teams can use to instruct developers on working with ChatGPT.

Fast-Rising Usage of ChatGPT in the Organization

ChatGPT, the popular chatbot from OpenAI, is estimated to have reached 100 million monthly active users in January 2023, just two months after its launch, making it the fastest-growing consumer application in history. According to the latest available data, ChatGPT has over 100 million users. The website generates 1 billion monthly visitors (1.6 billion in March 2023), 25 million daily visitors, and an estimated 10 million queries per day.

Enterprises are increasingly embracing generative AI, and ChatGPT in particular, as strong tools for:

Writing code and scripts in different programming languages.

Creating images, videos, reports, and presentations.

Streamlining external communications (adapting content for various mediums, including social media posts and emails).

Improving customer service (handling a large volume of customer requests and processing customer data).

Writing articles and documents.

Conducting initial research.

Various daily tasks.

In particular, programming and software development has emerged as one of ChatGPT’s main use cases.

Risks Surrounding Different Types of Usage

Due to its popularity and ease of use, generative AI has quickly brought new security threats. Here are some of the risks associated with the use of GenAI tools such as ChatGPT:

Data Exposure, Privacy, and Confidentiality - Enterprise use of GenAI may involve access to and processing of sensitive data, intellectual property, source code, trade secrets, and other data, including customer, private, and confidential information. Sending confidential and private data outside of the organization could trigger legal, compliance, and information exposure issues.

Sharing any confidential customer data, partner information, or PII may violate your organization's agreements with customers and partners, since you may be contractually or legally required to protect this data.

ChatGPT records everything users type into it. When you use ChatGPT, OpenAI may collect personal information from your messages, any files you upload, and any feedback you provide. OpenAI also states that conversations may be reviewed by its AI trainers to improve the chat and train the system.

Secure Code and Software Development - Many programmers have started using ChatGPT as a research source for their day-to-day work. One of the biggest risks of using ChatGPT for software development is the potential for the platform to generate code that is vulnerable to security threats, weaknesses, and exploits. AI-generated code should not be used and deployed without a proper security audit or a code review to find vulnerable or malicious components.

Developers should be very careful when copying code from ChatGPT or any other source since the quality of the code produced can be poor, which can lead to software vulnerabilities and security weaknesses such as XSS Attacks, SQL Injection, Path Traversal, etc.
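To make the SQL Injection risk concrete, here is a minimal sketch of the kind of flaw a code review should catch in AI-generated (or any copied) code. The table, function names, and in-memory SQLite database are assumptions chosen for illustration only; the point is the contrast between string concatenation and a parameterized query.

```python
import sqlite3


def demo_db():
    # Hypothetical in-memory database used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, username):
    # Vulnerable: user input is concatenated into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: the value is bound as a parameter and never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()
```

With a payload such as `' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version treats the payload as a literal name and returns nothing.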

Supply Chain Risks - Using generative AI introduces supply chain risks. ChatGPT, for example, is a third-party service similar to the various SaaS services that organizations consume. When choosing a service or supplier, it is highly recommended that the organization carry out a risk assessment of that service or supplier.

A third-party risk management (TPRM) process can include an examination of standards, procedures, policies, risk surveys, and penetration test reports, as well as questionnaires, passive scans, and gathering information that can reflect the level of security of that service provider. This evaluation reflects the risks the organization is exposed to by contracting with that service provider.

Because GenAI tools such as ChatGPT are new and highly accessible, it is likely that many organizations did not carry out a proper risk assessment before starting to work with them. This may expose them to supply chain security threats such as prompt injection attacks, in which attackers bypass expected AI behavior or make AI systems perform unintended tasks. Prompt injection attacks can be used to gain unauthorized access to confidential data, cause the AI model to behave in unexpected or harmful ways, and make it take actions that go against its design objectives.
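As a rough illustration of why prompt injection is possible, the sketch below shows an application that pastes untrusted text straight into its instructions. The instruction text, function names, and message layout are hypothetical and not tied to any specific vendor API. Keeping instructions and untrusted data in separate messages is a common hardening step, but it only reduces the risk and does not eliminate it.

```python
SYSTEM_INSTRUCTIONS = "Summarize the customer message. Never reveal internal notes."


def build_prompt_naive(untrusted_text):
    # Instructions and untrusted data end up in one string, so text such as
    # "ignore previous instructions" looks just like a real instruction.
    return f"{SYSTEM_INSTRUCTIONS}\n\n{untrusted_text}"


def build_messages(untrusted_text):
    # Hypothetical chat-style layout: instructions and untrusted data are
    # kept in separate messages instead of being merged into one string.
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTIONS},
        {"role": "user", "content": untrusted_text},
    ]
```

In the naive version, an attacker-controlled message becomes part of the same text as the application's own instructions, which is the condition prompt injection relies on.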

Intellectual Property (Copyright and Ownership) - Using the output of Generative AI could risk potential claims of copyright infringement, due to the training of some GenAI models on copyrighted content.

AI models can also generate new content that is similar to existing content. There is a risk that they could be used to create content that infringes on someone else's intellectual property. For example, a GenAI model could be used to create a product design that is similar to an existing design, leading to a potential patent infringement claim.

The terms of service indicate that ChatGPT output is the property of the person or service that provided the input. Complications arise when that output includes legally protected data that was gathered from the input of other sources. For example, an employee might input the organization’s security policy and ask the AI to paraphrase it. The output might be better written, but the AI may retain this data and use it in future responses. Currently, there is a lack of definitive case law in this area to provide clear guidance for legal policies.

Inaccurate Results and Bias - Generative AI like ChatGPT may be prone to bias and can reflect the biases of the data it is trained on. This can lead to unfair or discriminatory outcomes and/or illegal content. Generative AI also does not distinguish true from false, and many of ChatGPT’s answers are open to interpretation. Users must keep in mind that content inaccuracies may lead to illegal discrimination, potential damage to reputation, and possible legal repercussions for the organization.

Risk Appetite and Mitigations

Despite the risks involved, the use of Generative AI like ChatGPT can also provide significant benefits for organizations, in its technological advancement and in improving the team's daily tasks. To ensure the safe and effective use of AI tools, organizations should carefully evaluate the risks and benefits, implement appropriate safeguards and controls, and ensure ongoing monitoring and evaluation of the AI tool's performance.

An organization with a low risk appetite can adopt a much stricter policy: blocking access to GenAI URLs, examining each request individually, and allowing access only as needed, to specific users and specific AI models.

A less sensitive organization, on the other hand, might see the technological value of GenAI in a competitive environment and allow access to AI websites once training and guidance have been given to its staff.

Another approach can be to allow access but with certain restrictions, for example, DLP policies blocking sensitive data upload.

In the next chapter, we will offer a number of mitigations and controls for the security teams of the organization. It is important to understand that the implementation of some or many protections may have an impact on work performance, so it is important that every organization choose the ones that have the most relevance to its approach and strategy.

Suggested Mitigations and Controls

Access Restriction

Low-Risk Appetite Companies

One of the main concerns is understanding AI capabilities and making sure that the company's employees use Generative AI models like ChatGPT in a safe manner. Although AI tools offer sophisticated technological progress, employees must be aware of their abilities, their limitations, and the risks they might expose the organization to. Therefore, as a first step, we recommend that organizations that have not yet studied the topic or implemented safeguards block access, or grant access only to those who require it and have undergone proper training. This provides time to research the developing field and its advantages and disadvantages, and to make an educated decision.

There are several ways in which organizations can carry out such a restriction. Here are several suggestions categorized by risk appetite, meaning the degree of risk that the organization is willing to accept in order to allow the use of GenAI. An organization may decide that the risk associated with GenAI tools like ChatGPT is high and block access by default, approving each request only after examining it and guiding the requester. This is a Low-Risk Appetite company. Others, with a High-Risk Appetite, will prefer to accept the risks in order to facilitate use, seeing the value it brings to the organization's teams and tasks.

Enterprise Web Gateway Proxy - Create a web filter policy that denies access to a single URL or a whole category.

Zero Trust Browser - A solution, such as Surf Security or Talon, that replaces tools like VPN/VDI by managing the browser and the web traffic of the endpoint.

Firewall - A firewall allows web filtering and can block access to websites based on URL, category, or application.

Block and report unauthorized users attempting to access GPT URLs.

Suggested URLs:

ChatGPT - https://chat.openai.com

Google Bard - https://bard.google.com

Bing AI - https://bing.com

ChatGPT API - https://api.openai.com

Wand AI - https://wand.ai/

Glean AI - https://www.glean.ai/

Hugging Face - https://huggingface.co/

Bearly AI - https://bearly.ai/

Base 64 AI - https://base64.ai/

Nanonets AI - https://nanonets.com/

Supernormal AI - https://supernormal.com/

Ask your PDF AI - https://askyourpdf.com/

Palm AI - https://palmai.io/

Midjourney AI - https://www.midjourney.com/

GPTE AI - https://gpte.ai/
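The following is a minimal sketch of how a deny rule over such a URL list could be expressed, assuming the filter matches on the request's host name. The `BLOCKED_DOMAINS` set is a small sample taken from the list above, and `is_blocked` is a hypothetical helper, not the configuration syntax of any particular proxy or firewall.

```python
from urllib.parse import urlsplit

# Sample of domains from the suggested list; extend as needed.
BLOCKED_DOMAINS = {
    "chat.openai.com",
    "api.openai.com",
    "bard.google.com",
    "huggingface.co",
}


def is_blocked(url):
    # Match on the host name only, so a blocked domain that merely appears
    # in a path or query string of another site does not trigger the rule.
    # URLs without a scheme have no parsed host and are not matched here.
    host = (urlsplit(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)
```

Matching on the exact domain or a dot-prefixed suffix covers subdomains while avoiding false matches on look-alike hosts such as `chat.openai.com.example.net`.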

Training and Awareness

Low-Medium Risk Appetite Companies

Organizations should instruct and train their staff on how to handle sensitive information such as confidential customer data, PII, information about employment candidates, and internal business information (trade secrets, financial data, etc.). If a query includes specific information about a customer or candidate, that information, as well as the answer, may later be shared with anyone. Even inquiries about technological issues may reveal the company's development direction.

Data entered into ChatGPT may, by default, be used to train the model and could then surface in responses to other users who ask relevant questions, resulting in data leakage.

Additionally, if a Generative AI service is breached, content that a company is contractually or legally bound to protect might leak and be associated with the firm, causing reputational damage. It is equally important to recognize that GenAI tools like ChatGPT can output inaccurate and biased results. Therefore, outputs should be checked and verified, and not relied upon without further inspection. Ensure employees are aware of these issues and provide training on best practices for secure and responsible usage. Encourage reporting of security concerns or incidents involving ChatGPT or other AI tools.

Data Protection (DLP)

Protect PII and other sensitive information through enforcement. Employee training is of great importance, but it cannot be completely relied upon, and dedicated measures must be enforced. An organization that already has a policy to prevent data leakage must also enforce it on Generative AI models like ChatGPT. If no such policy exists, one must be created, implemented, and enforced. This can be done in a number of ways, depending on the system. Here are a few:

Low-Risk Appetite Companies

Clipboard Protection - Blocks the use of the clipboard to copy sensitive data.

Web Protection - Blocks sensitive data being posted to websites.

Block and report on users copying sensitive data into a browser prompt in GPT URLs.

Block and report on users copying sensitive data from an IDE (e.g., Visual Studio) into a browser prompt.

Medium-Risk Appetite Companies

Clipboard Protection - Monitor the use of the clipboard to copy sensitive data.

Web Protection - Monitor sensitive data being posted to websites.

Report on users copying sensitive data from an IDE (e.g., Visual Studio) into a browser prompt.
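The difference between the blocking posture (low risk appetite) and the monitoring posture (medium risk appetite) can be sketched as a small inspection function. This is an illustration only: the patterns, the `inspect_paste` name, and the mode strings are assumptions, and a real DLP product uses far richer detection than three regular expressions.

```python
import re

# Illustrative patterns only; real DLP rules are broader and tuned per organization.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def inspect_paste(text, mode):
    """Decide what to do with text about to be pasted into a GenAI prompt.

    mode is "block" (low risk appetite) or "monitor" (medium risk appetite).
    """
    if mode not in ("block", "monitor"):
        raise ValueError(f"unknown mode: {mode}")
    findings = sorted(name for name, rx in PATTERNS.items() if rx.search(text))
    if not findings:
        return {"action": "allow", "findings": []}
    # Blocking stops the paste; monitoring lets it through but reports it.
    action = "block" if mode == "block" else "report"
    return {"action": action, "findings": findings}
```

The same detection runs in both modes; only the enforcement decision changes, which makes it straightforward to start in monitoring mode and tighten to blocking later.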

Implement Access Control

Low-Medium Risk Appetite Companies

After implementing the proposed protections, such as a DLP policy, and after conducting training and awareness sessions for the employees identified as having a real need for access to AI tools, create a managed and monitored group for these users.

This group should then be added to an allow rule policy that grants access to essential AI URLs. How the rule is defined varies according to the system that enforces it: firewall, Web Gateway Proxy, Zero Trust Browser, etc.

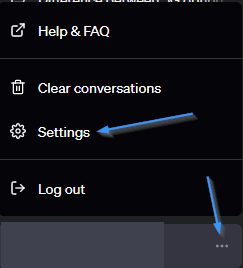

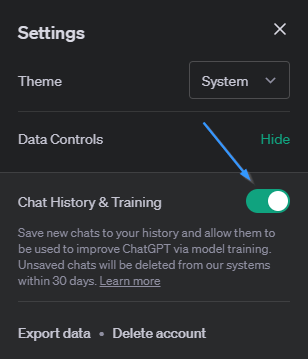
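The allow rule described above can be sketched as a simple decision function, assuming the enforcing system knows the user's group memberships. The group name `genai-approved`, the domain sample, and the returned action strings are hypothetical and stand in for whatever the firewall, proxy, or browser policy actually uses.

```python
from urllib.parse import urlsplit

AI_DOMAINS = {"chat.openai.com", "api.openai.com"}  # sample of essential AI URLs
APPROVED_GROUP = "genai-approved"  # hypothetical group of trained users


def decide(url, user_groups):
    host = (urlsplit(url).hostname or "").lower()
    is_ai = any(host == d or host.endswith("." + d) for d in AI_DOMAINS)
    if not is_ai:
        return "allow"  # not a GenAI destination, outside this policy
    if APPROVED_GROUP in user_groups:
        return "allow-and-log"  # approved users are permitted but monitored
    return "deny-and-report"  # everyone else is blocked and reported
```

Logging approved access, rather than silently allowing it, keeps the group managed and monitored as recommended.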

Chat History and Training - OpenAI Controls

Low-Medium Risk Appetite Companies

Manage your data - By default, ChatGPT saves user questions and the answers generated in response, and uses the data to train its AI models.

OpenAI has introduced new controls that enable users to turn off their chat history:

Conversations that take place while chat history is disabled won't appear in the “history” sidebar, and OpenAI won’t use the data to train its AI models.

When chat history is disabled, OpenAI retains new conversations for 30 days and reviews them only when needed to monitor for abuse, before permanently deleting them.

It is recommended to disable chat history.

Enterprises should stay up-to-date on AI security and on future controls and services, such as ChatGPT Business.

According to OpenAI, ChatGPT Business will be geared toward “professionals who need more control over their data as well as enterprises seeking to manage their end users.”

That suggests the service could include additional user management features. Such features might help administrators more easily perform tasks such as creating accounts for a company’s employees.

AI-Generated Code

AI systems learn from open source code. Since open source code might contain vulnerabilities, it is likely that they will also produce code that is not secure. It is advised to evaluate any AI-generated code before using it and integrating it into production.

Code generated by Generative AI shouldn't be used or deployed without a proper security audit or code review that inspects it for vulnerable or malicious components. Here is a list that security teams can use to instruct developers on working with ChatGPT.

Best Practices When Using Code from AI Tools:

Never Trust, Always Verify - Treat outputs produced by ChatGPT as suggestions that should be verified and checked for accuracy.

Validate the solution against another source such as a community that you rely on.

Ensure the code follows best practices, following the principle of least privilege for providing access to databases and other critical resources.

Check the code for potential vulnerabilities using tools such as CodeQL and Trivy.

Use an established security library, such as ESAPI, AntiSamy, or Cerberus, to cover input validation and sanitization.

Validate input against a whitelist (allowlist) of permitted values rather than a blacklist (denylist) of forbidden ones.

Make sure that the server side will conduct input validation as a mandatory requirement.

Pay attention to the content you enter into ChatGPT. Be cautious when utilizing very sensitive inputs because it's unclear how safe the data you enter into ChatGPT is.

Make sure to remove any secrets from the code (even if they are encoded in base64). All secrets should be stored in a secured vault.

Make sure you're not unintentionally disclosing any personal data that could violate compliance rules like GDPR.
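To illustrate the whitelist and server-side validation items in the list above, here is a minimal sketch comparing the two validation styles. The username rule, the denied substrings, and the function names are assumptions for illustration; established libraries should be preferred over hand-written checks in production code.

```python
import re

# Allowlist: describe exactly what a valid value looks like.
ALLOWED_USERNAME = re.compile(r"[A-Za-z0-9_]{3,32}")

# Denylist: enumerate known-bad fragments (always incomplete).
DENIED_SUBSTRINGS = ("<script", "' or ")


def validate_username_allowlist(value):
    # fullmatch requires the whole value to fit the permitted pattern.
    return ALLOWED_USERNAME.fullmatch(value) is not None


def validate_username_denylist(value):
    # Accepts anything that does not contain a listed fragment.
    lowered = value.lower()
    return not any(bad in lowered for bad in DENIED_SUBSTRINGS)
```

A payload such as `<img src=x onerror=alert(1)>` passes the denylist check because it contains none of the listed fragments, but fails the allowlist check because it does not match the permitted pattern. Running this check on the server, rather than only in the browser, ensures it cannot be bypassed by a modified client.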

About Nox90

Nox90 is a leading security company specialising in DevOps & cloud environments. We are unique in our ability to package leading security solutions with deep DevOps and security knowledge and experience.

Our clients are large organisations and advanced startups with varying levels of security maturity – wishing to work faster, better and safer at building and updating their online environment.

“No other professional services company was able to audit our automation and talk to DevOps guys like Nox90 did”

Head of security, major international bank
